McShane & Wyner's conclusion, from *A Statistical Analysis of Multiple Temperature Proxies*:

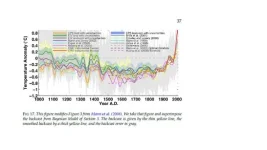

**6. Conclusion.** Research on multi-proxy temperature reconstructions of the earth’s temperature is now entering its second decade. While the literature is large, there has been very little collaboration with university-level, professional statisticians [Wegman, Scott and Said (2006), Wegman (2006)]. Our paper is an effort to apply some modern statistical methods to these problems. While our results agree with the climate scientists’ findings in some respects, our methods of estimating model uncertainty and accuracy are in sharp disagreement.

On the one hand, we conclude unequivocally that the evidence for a “long-handled” hockey stick (where the shaft of the hockey stick extends to the year 1000 AD) is lacking in the data. The fundamental problem is that there is a limited amount of proxy data which dates back to 1000 AD; what is available is weakly predictive of global annual temperature. Our backcasting methods, which track quite closely the methods applied most recently in Mann (2008) to the same data, are unable to catch the sharp run-up in temperatures recorded in the 1990s, even in-sample. As can be seen in Figure 15, our estimate of the run-up in temperature in the 1990s has a much smaller slope than the actual temperature series. Furthermore, the lower frame of Figure 18 clearly reveals that the proxy model is not at all able to track the high-gradient segment. Consequently, the long flat handle of the hockey stick is best understood to be a feature of regression and less a reflection of our knowledge of the truth. Nevertheless, the temperatures of the last few decades have been relatively warm compared to many of the 1000-year temperature curves sampled from the posterior distribution of our model.

Our main contribution is our efforts to seriously grapple with the uncertainty involved in paleoclimatological reconstructions. Regression of high-dimensional time series is always a complex problem with many traps. In our case, the particular challenges include (i) a short sequence of training data, (ii) more predictors than observations, (iii) a very weak signal, and (iv) response and predictor variables which are both strongly autocorrelated. The final point is particularly troublesome: since the data is not easily modeled by a simple autoregressive process, it follows that the number of truly independent observations (i.e., the effective sample size) may be just too small for accurate reconstruction.

Climate scientists have greatly underestimated the uncertainty of proxy-based reconstructions and hence have been overconfident in their models. We have shown that time dependence in the temperature series is sufficiently strong to permit complex sequences of random numbers to forecast out-of-sample reasonably well fairly frequently (see Figures 9 and 10). Furthermore, even proxy-based models with approximately the same amount of reconstructive skill (Figures 11–13) produce strikingly dissimilar historical backcasts (Figure 14); some of these look like hockey sticks but most do not.

Natural climate variability is not well understood and is probably quite large. It is not clear that the proxies currently used to predict temperature are even predictive of it at the scale of several decades, let alone over many centuries. Nonetheless, paleoclimatological reconstructions constitute only one source of evidence in the AGW debate.

Our work stands entirely on the shoulders of those environmental scientists who labored untold years to assemble the vast network of natural proxies. Although we assume the reliability of their data for our purposes here, there still remains a considerable number of outstanding questions that can only be answered with a free and open inquiry and a great deal of replication.
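To make the effective-sample-size point concrete, here is a minimal Python sketch. It assumes the series behave like a stationary AR(1) process. The authors say explicitly that the real data is *not* easily modeled that way, so this only illustrates the direction of the effect and is not their method.

The function names and the 150-year series length are hypothetical. Two textbook approximations are used:

- For one series with lag-1 autocorrelation rho, the variance of the sample mean is inflated by 1 + 2·Σ(1 − k/n)·rho^k.
- For correlating two independent AR(1) series, a common large-n approximation is n(1 − rho_x·rho_y)/(1 + rho_x·rho_y).

```python
"""Illustrative effective-sample-size calculations under an ASSUMED AR(1) model.

These are standard textbook approximations, not McShane & Wyner's method.
"""


def _check_rho(rho: float) -> None:
    # A stationary AR(1) process requires |rho| < 1.
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1 (stationary AR(1))")


def n_eff_mean(n: int, rho: float) -> float:
    """Effective sample size for the mean of a stationary AR(1) series.

    Var(mean) = sigma^2 / n * [1 + 2 * sum_{k=1}^{n-1} (1 - k/n) * rho**k],
    so n_eff is n divided by the bracketed variance-inflation factor.
    """
    _check_rho(rho)
    inflation = 1.0 + 2.0 * sum((1.0 - k / n) * rho**k for k in range(1, n))
    return n / inflation


def n_eff_mean_large_n(n: int, rho: float) -> float:
    """Large-n limit of n_eff_mean: n * (1 - rho) / (1 + rho)."""
    _check_rho(rho)
    return n * (1.0 - rho) / (1.0 + rho)


def n_eff_pair(n: int, rho_x: float, rho_y: float) -> float:
    """Approximate effective sample size when correlating two AR(1) series.

    Large-n approximation n * (1 - rho_x*rho_y) / (1 + rho_x*rho_y); relevant
    when both the predictor (proxy) and the response (temperature) are
    autocorrelated.
    """
    _check_rho(rho_x)
    _check_rho(rho_y)
    r = rho_x * rho_y
    return n * (1.0 - r) / (1.0 + r)


if __name__ == "__main__":
    n = 150  # hypothetical number of annual training observations
    print(round(n_eff_mean(n, 0.9), 1))          # exact finite-n formula
    print(round(n_eff_mean_large_n(n, 0.9), 1))  # large-n approximation
    print(round(n_eff_pair(n, 0.9, 0.9), 1))     # two autocorrelated series
```

With a lag-1 autocorrelation of 0.9, the exact formula gives about 8.4 and the large-n formula about 7.9. In other words, 150 annual values carry roughly the information of 8 independent ones when estimating a mean. Correlating two such series gives about 15.7. Because the real series are not well described by a simple AR process, the true figures could differ. The sketch only shows why more years of data need not mean proportionally more evidence.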