I'm still reading through the thread, but here are some early thoughts on the page 1 posts.

To be honest and brutal about this: design, artist and illustrator jobs in the West have been draining away to the Far East for the last 15 years because of websites like Fiverr, 99designs, etc. It was bad enough in the 80s and 90s, when you took a pay cut to go and get a job in those fields in London, but competing with an illustrator in Malaysia who will do the same work for a twentieth of my hourly rate just doesn't work for most designers and artists.

Personally, I gave up on ideas last year when AI really burst the bubble, but now I am learning to use AI as a way to quickly produce mock-ups. Not all creative AI is bad. One example shown to me this week, "invideo.ai", can take recordings of MY face and gestures and then help me produce explainer videos that need the minimum number of retakes. If I am especially camera shy, I only need to be in front of a camera once, and the AI can output hundreds of videos of me explaining and demonstrating things for technical support.

That is AI being useful. In terms of 2D art and animated video, though, it isn't there yet. I once watched ex-Disney animator Aaron Blaise explain that he was hoping AI could take his thousands of drawings, accurately colour them and help him work faster. This video is from 2023, and AI still isn't quite where he wanted it to be.

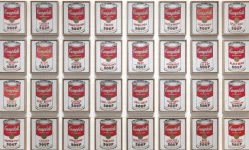

The other solution I envisaged was for artists to work on physical texture and touch in their work. AI art generally produces digital output, and I felt that artists needed to move into physical collage and texture to differentiate themselves from "AI art", such as in the examples below:

or

However, AI will eventually be hooked up to 3D printers, and artists will have to move on again.

I think the situation is better for skilled jewellers and the like. You "could" get AI to design some jewellery for you and then 3D print it, but jewellery is also the skill of creating strength and structure in a piece of metal. A cast piece of jewellery might look good, but drop it once and watch it shatter, whereas a piece that has been heat treated and drawn will survive mistreatment and accidents. That is why jewellers are still prized for their work and skill.

Not every painter works that way, whereas AI always works the same way. The word "landscape" is also open to interpretation, and Jackson Pollock certainly chose not to be a slave to physical research in his time.

The classic story in the UK is that the Rolling Stones and the Beatles became popular by playing African American blues music badly (or at least nowhere near as well as their heroes could), and their interpretation was different enough that white American kids would enjoy and buy it, whereas they would never have listened to or bought genuine blues music.

An artist who copies another artist's work and style exactly is called a "forger", and their pieces are often called forgeries. The most expensive AI art sites can be akin to producing forgeries: a client can ask for a painting as if done by Picasso and get that result. The AI is not going to change or tweak the result to suit its own personal tastes.

So I could now ask a music AI to create a Howlin' Wolf or Presley track and it would do it pretty well, but it's not going to create any new kind of music by doing that or draw in a new audience. That's where a human trying to play or create something, and failing in a creative way, can make something new that hasn't been heard before.

Here's a "new" AI Elvis Presley track with only 73 views. Now imagine how many views it would get if Elvis himself were here to make the same music.